By 2026, users expect direct, trustworthy answers from Google AI Overviews, Bing Copilot, ChatGPT, and Perplexity—without clicking a dozen links. If your content isn’t answer‑ready, you’re invisible.

This technical playbook explains how answer engines work, what they trust, and the exact page and site patterns that earn citations. You’ll get a 90‑day plan, measurement framework, and a practical pipeline using SEOsolved to move from theory to results.

Why Answer Engines Are Rewriting SEO in 2026

An answer engine surfaces a concise, AI‑synthesized response, often with citations, instead of a page of “10 blue links.” Google’s AI Overviews (formerly SGE), Bing Copilot, Perplexity, and ChatGPT search represent this shift toward direct answers, conversational context, and follow‑ups. Studies indicate a steep rise in zero‑click behavior and a preference for AI‑enhanced experiences, which is why this matters for technical SEO and content strategy (zero‑click and user preference).

What is an answer engine?

Definition: An answer engine uses large language models (LLMs) with retrieval to generate direct, contextual answers to queries, often citing sources, rather than listing links. Typical traits include:

Conversational search with follow‑up prompts

Generative summaries that consolidate multiple sources

Confidence‑weighted, safety‑filtered outputs

From 10 blue links to direct answers

Traditional SERPs push users to click; answer engines reduce friction by summarizing. With zero‑click searches exceeding 60% and 76% of users preferring AI‑enhanced engines for complex questions, visibility now means being cited inside the answer, not just ranking below it (source).

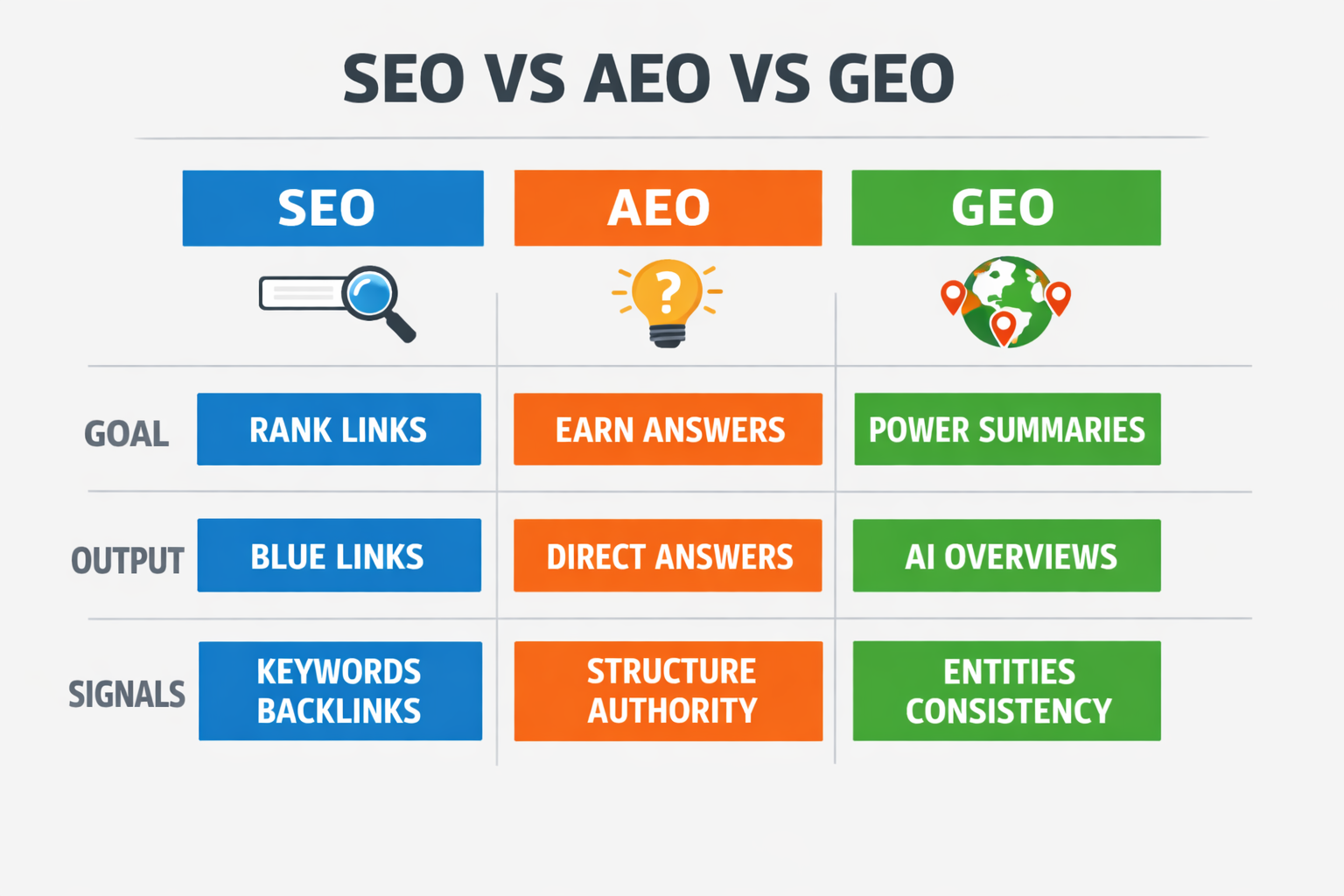

| | SEO | AEO | GEO |
|---|---|---|---|
| Goal | Optimize to rank links in SERPs | Optimize to earn direct answers and citations | Optimize for accurate inclusion in AI summaries |
| Key signals | Keywords, backlinks, on‑page signals | Structure, authority, clarity, source trust | Entity coverage, consistency, provenance |

SEO, AEO, and GEO should be unified in one strategy, not treated as silos (overview; integration).

Where answers come from (LLMs, retrieval, citations)

Answer engines mix LLMs, vector retrieval, and citations. A typical flow: ingest documents → embed passages → retrieve relevant chunks → re‑rank → generate an answer with citations. Unlike deterministic SERP rankings, this pipeline is probabilistic and shaped by model reasoning, conversation history, and dynamic data (primer). Engines prefer sources that are easy to summarize safely and accurately (selection factors).
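To make that flow concrete, here is a toy Python sketch of the five stages. It rests on heavy simplifying assumptions and is not how any particular engine works: the "embedding" is a term-frequency vector, retrieval and re-ranking collapse into one cosine score, "generation" simply stitches the top passages together with numbered citations, and the URLs are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric tokens; production systems use subword tokenizers.
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Stand-in "embedding": a sparse term-frequency vector. Real engines use
    # dense vectors produced by a neural embedding model.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def answer(query: str, docs: dict[str, str], k: int = 2) -> str:
    # 1. Ingest: split each document into paragraph-level passages.
    passages = [
        (url, para.strip())
        for url, text in docs.items()
        for para in text.split("\n\n")
        if para.strip()
    ]
    # 2. Embed the query (passages are embedded on the fly below).
    q = embed(query)
    # 3-4. Retrieve + re-rank: one cosine score stands in for both stages.
    top = sorted(passages, key=lambda item: cosine(q, embed(item[1])), reverse=True)[:k]
    # 5. "Generate": stitch the top passages together with numbered citations.
    body = " ".join(f"{text} [{i}]" for i, (_, text) in enumerate(top, start=1))
    refs = "\n".join(f"[{i}] {url}" for i, (url, _) in enumerate(top, start=1))
    return f"{body}\n{refs}"


docs = {
    "https://example.com/aeo": "AEO earns citations inside AI answers.\n\nUnrelated footer text.",
    "https://example.com/cwv": "Core Web Vitals measure loading and interactivity.",
}
print(answer("what is AEO and how do citations work", docs, k=1))
```

Swapping `embed` for a real embedding model and adding a separate re-ranking stage would bring this closer to a production RAG pipeline, though still a simplified one.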

The AI and ML Mechanics SEOs Must Understand

To optimize for answer engines, teams need a working model of embeddings, vector similarity, retrieval, and generation. This section highlights the mechanics that inform your on‑page and technical decisions.

LLMs, tokens, and context windows

Token limits constrain how much of your page a model can “see.” Put the answer up top and repeat key entities in section summaries.

Long pages are chunked or summarized before generation, so details buried deep in a page may never be seen; a predictable structure reduces the risk that key answers get truncated.

Formatting such as definition boxes, bullet lists, and short paragraphs increases extractability.
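A rough way to see why answer placement matters: the sketch below approximates a context budget by truncating a page to its first N words (real engines count subword tokens, and their limits vary), and packs whole paragraphs into passage-sized chunks. The function names and the word-count proxy are assumptions for illustration.

```python
def truncate_to_budget(text: str, budget: int) -> str:
    # Approximate tokens as whitespace-separated words; real tokenizers
    # split differently, so treat the budget as a rough proxy.
    return " ".join(text.split()[:budget])


def chunk_passages(text: str, max_words: int) -> list[str]:
    # Pack whole paragraphs into chunks of at most max_words words so a short,
    # self-contained paragraph is never split mid-thought. A single paragraph
    # longer than max_words still becomes its own (oversized) chunk.
    chunks: list[str] = []
    current: list[str] = []
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = para.split()
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


answer_line = "An answer engine generates direct answers with citations. "
filler = "Background detail. " * 50
print("direct answers" in truncate_to_budget(answer_line + filler, 40))  # True: answer first
print("direct answers" in truncate_to_budget(filler + answer_line, 40))  # False: answer last
```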

Embeddings and vector similarity

Embeddings transform text into vectors so engines can match queries to semantically similar passages via cosine similarity. Optimize for passage‑level relevance by using crisp subheadings, answer‑first paragraphs, and entity‑rich phrasing that reduces ambiguity.
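For reference, the cosine similarity between a query vector **q** and a passage vector **p** in *d* dimensions is:

$$
\cos(\mathbf{q}, \mathbf{p}) = \frac{\mathbf{q} \cdot \mathbf{p}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{p} \rVert} = \frac{\sum_{i=1}^{d} q_i p_i}{\sqrt{\sum_{i=1}^{d} q_i^2} \, \sqrt{\sum_{i=1}^{d} p_i^2}}
$$

For toy vectors q = (1, 1, 0) and p = (1, 0, 1), the dot product is 1 and each norm is √2, so the similarity is 1/2. Vectors pointing in the same direction score 1, and orthogonal (unrelated) vectors score 0.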

Retrieval-Augmented Generation (RAG) and re-rankers

Many answer engines use a hybrid retrieval stack: lexical search (such as BM25) combined with dense vector search, followed by a re‑ranker that chooses the best few passages. The generator then drafts an answer and cites its sources. Winning content is therefore:

Retrievable (clear entities and structure)

Re‑rankable (unique, authoritative, concise)

Generatable (safe to summarize with verifiable claims)
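The hybrid pattern can be sketched in a few dozen lines of Python. Assumptions to note: BM25 is implemented directly with common defaults (k1 = 1.5, b = 0.75); character-trigram cosine stands in for a dense embedding model; the two rankings are merged with reciprocal rank fusion (k = 60), one common fusion method, since engines do not publish theirs; and the final cross-encoder re-ranking step is only noted in a comment.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, passages: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    # Okapi BM25 with the non-negative IDF variant used by Lucene.
    docs = [tokenize(p) for p in passages]
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    df = Counter(t for d in docs for t in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for t in tokenize(query):
            if tf[t] == 0:
                continue
            idf = math.log((n - df[t] + 0.5) / (df[t] + 0.5) + 1)
            score += idf * tf[t] * (k1 + 1) / (tf[t] + k1 * (1 - b + b * len(d) / avgdl))
        scores.append(score)
    return scores


def trigram_vector(text: str) -> Counter:
    # Stand-in for a dense embedding: character trigrams catch partial and
    # morphological matches (e.g. "engine" vs "engines") that exact tokens miss.
    padded = f"  {text.lower()}  "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    # Each ranking lists passage indices best-first; RRF sums 1 / (k + rank).
    fused: Counter = Counter()
    for ranking in rankings:
        for rank, idx in enumerate(ranking, start=1):
            fused[idx] += 1.0 / (k + rank)
    return [idx for idx, _ in fused.most_common()]


def hybrid_search(query: str, passages: list[str], top_k: int = 3) -> list[int]:
    lexical = bm25_scores(query, passages)
    dense = [cosine(trigram_vector(query), trigram_vector(p)) for p in passages]
    order = range(len(passages))
    by_lexical = sorted(order, key=lambda i: lexical[i], reverse=True)
    by_dense = sorted(order, key=lambda i: dense[i], reverse=True)
    # A production stack would now re-score these candidates with a
    # cross-encoder re-ranker; this sketch returns the fused order directly.
    return reciprocal_rank_fusion([by_lexical, by_dense])[:top_k]


passages = [
    "BM25 is a lexical ranking function.",
    "Dense retrieval compares embeddings.",
    "Bake bread at 220 degrees.",
]
print(hybrid_search("lexical ranking with BM25", passages, top_k=1))
```

RRF is attractive in sketches like this because it only needs rank positions, so the lexical and dense scores never have to be put on a common scale.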

How Answer Engines Evaluate and Trust Content

Beyond classic ranking factors, engines weigh entity clarity, author credentials, citation quality, and freshness. E‑E‑A‑T remains central—especially for sensitive topics—so document experience and authorship clearly (E‑E‑A‑T for AEO).

Entity-centric relevance and knowledge graphs

Disambiguation drives inclusion. Use schema.org types, explicit entity linking, and consistent naming so engines can map your entities to the Knowledge Graph. Organize content into clusters that reinforce topical authority and clarify how entities relate to each other.

Source authority, citations, and link context

Answer engines favor sources with strong provenance. Cite authoritative references and use descriptive outbound link context. AEO is about making your page the safest, most quotable source for a concise answer (AEO definition) (how answer engines work).

Freshness and change velocity

Engines track recency and update depth. Publish meaningful updates (data, steps, screenshots) and maintain steady change velocity to increase re‑crawl priority and temporal relevance.
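One way to operationalize "meaningful updates" is to gate a change-log entry or a `dateModified` bump on how much the text actually changed. This is a minimal sketch using Python's `difflib`; the `is_meaningful_update` name and the 5% threshold are hypothetical choices, not a known engine rule.

```python
import difflib


def is_meaningful_update(old: str, new: str, min_change: float = 0.05) -> bool:
    # SequenceMatcher.ratio() is 1.0 for identical word sequences. Treat the
    # edit as substantive when at least `min_change` of the text differs.
    # The 5% default is an arbitrary illustrative threshold.
    similarity = difflib.SequenceMatcher(None, old.split(), new.split()).ratio()
    return (1.0 - similarity) >= min_change


page = " ".join(f"word{i}" for i in range(40))
typo_fix = page.replace("word39", "word39x")
new_section = page + " New 2026 benchmark data with updated screenshots and steps."
print(is_meaningful_update(page, typo_fix))     # a one-word tweak
print(is_meaningful_update(page, new_section))  # an added paragraph
```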

Structuring Pages to Earn Direct Answers

Structure determines whether your content is easy to extract. Use patterns that answer engines can summarize safely, with support and provenance.

Lead with the answer (then explain)

TL;DR: Start each section with a single‑sentence answer or definition, then elaborate. Add a small executive summary box at the top of pages.

Cluster related questions on-page

Group 6–10 closely related FAQs under each subtopic.

Include natural follow‑ups a user might ask in chat.

Cross‑link to hub pages to reinforce coverage. For example, see our guide on Semantic SEO and topical authority.

Reinforce with structured data

Implement FAQPage, HowTo, and Article schema in JSON‑LD.

Use breadcrumbs and @id anchors for clean entity references.

Include source citations in‑text and in a references section.
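A minimal Python sketch that builds FAQPage JSON-LD from question/answer pairs and wraps it in a script tag. The page URL and helper names are hypothetical; validate the output with a structured-data testing tool before shipping.

```python
import json


def faq_jsonld(page_url: str, faqs: list[tuple[str, str]]) -> dict:
    # One Question/Answer pair per FAQ; the "#faq" fragment gives the block a
    # stable @id that other nodes can reference.
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "@id": f"{page_url}#faq",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def to_script_tag(data: dict) -> str:
    # Escape "</" so answer text can never close the <script> element early.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


faqs = [
    ("What is an answer engine?",
     "An AI system that generates direct answers, often with citations."),
    ("How is AEO different from SEO?",
     "SEO ranks links in SERPs; AEO earns inclusion and citations inside AI answers."),
]
print(to_script_tag(faq_jsonld("https://example.com/answer-engines", faqs)))
```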

Technical SEO That Supports Answer Inclusion

Engines can’t quote what they can’t crawl, render, or trust. Ensure pristine technical health so your content is ingestible and stable.

Crawlability and renderability

Audit robots.txt, status codes, and blocked assets.

Prefer SSR or SSG so the initial HTML already contains the full content; client‑side hydration should enhance that HTML, not create it.

Use dynamic rendering only as a last resort with parity checks.

Deep dive: Technical SEO That Works: 2026 Best Practices.
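A quick way to audit robots.txt rules for specific crawlers is Python's standard-library `urllib.robotparser`. The sketch parses rules from a string so it runs offline; the robots.txt content and URLs are hypothetical, and the crawler tokens listed are examples you should confirm against each vendor's documentation.

```python
from urllib.robotparser import RobotFileParser

# Crawler tokens to audit. This list is an assumption for illustration;
# check each vendor's documentation for its current user-agent tokens.
AGENTS = ("Googlebot", "Bingbot", "GPTBot", "PerplexityBot")


def crawler_access(robots_txt: str, url: str, agents: tuple[str, ...] = AGENTS) -> dict[str, bool]:
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network fetch is needed.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


robots_txt = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
print(crawler_access(robots_txt, "https://example.com/guides/answer-engines"))
```

Note that `urllib.robotparser` applies the first matching rule in file order and does not implement every extension search engines support (such as `*` wildcards inside paths), so treat its results as a first-pass check.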

Performance and stability

Hit Core Web Vitals at the 75th percentile of field data: LCP ≤ 2.5 s, CLS ≤ 0.1, INP ≤ 200 ms.

Optimize images, cache aggressively, and minimize script bloat.

Monitor render errors and long tasks in field data.
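To turn those thresholds into a pass/fail check, a sketch like this classifies field samples at the 75th percentile using Google's published good / needs improvement / poor boundaries. The sample values are made up, and the nearest-rank percentile may differ slightly from how RUM or CrUX tooling computes percentiles.

```python
import math

# Google's published "good" / "poor" boundaries, assessed at the 75th percentile
# of field data: LCP and INP in milliseconds, CLS unitless.
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}


def p75(samples: list[float]) -> float:
    # Nearest-rank percentile; analytics tools may interpolate differently.
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def assess(field_data: dict[str, list[float]]) -> dict[str, tuple[float, str]]:
    report = {}
    for metric, samples in field_data.items():
        good, poor = THRESHOLDS[metric]
        value = p75(samples)
        if value <= good:
            status = "good"
        elif value <= poor:
            status = "needs improvement"
        else:
            status = "poor"
        report[metric] = (value, status)
    return report


field_data = {
    "LCP": [1800, 2100, 2400, 3900],
    "INP": [120, 180, 260, 600],
    "CLS": [0.02, 0.05, 0.3, 0.4],
}
print(assess(field_data))
```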

Canonicalization and duplication control

Consolidate duplicate variants with rel=canonical, and use hreflang to map language and regional alternates.

Control pagination and URL parameters (sorting, filtering, tracking) so they don’t spawn crawlable duplicates.

Deduplicate near‑identical pages to reduce retrieval confusion.
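Two of these tasks lend themselves to small utilities. The sketch below normalizes URL variants (assuming `utm_*`, `gclid`, and `fbclid` are the tracking parameters to drop, and that trailing slashes carry no meaning on your site) and scores near-duplicate text with Jaccard similarity over word 3-gram shingles. What counts as "near-identical" is a judgment call.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Tracking parameters to strip; extend to match your own analytics setup.
TRACKING_PARAMS = {"gclid", "fbclid"}


def normalize_url(url: str) -> str:
    # Lowercase scheme/host, drop tracking params and fragments, sort the
    # remaining params, and strip trailing slashes (a site policy choice).
    parts = urlsplit(url)
    query = sorted(
        (k, v) for k, v in parse_qsl(parts.query)
        if not k.startswith("utm_") and k not in TRACKING_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    # Always returns at least one shingle, even for very short texts.
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def near_duplicate_score(a: str, b: str) -> float:
    # Jaccard similarity of word 3-gram shingles: 1.0 means identical sets.
    sa, sb = shingles(a), shingles(b)
    return len(sa & sb) / len(sa | sb)


print(normalize_url("HTTPS://Example.com/guide/?utm_source=x&b=2&a=1#top"))
```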

Data, Schema, and Entity Hygiene

Standardize entity signals site‑wide so engines can attribute, disambiguate, and cite you confidently.

Organization, Author, and About signals

Use Organization and Person schema for ownership and expertise.

Publish an About page, editorial policy, and author bios with credentials.

Include contact and physical presence details where relevant.
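A sketch of Organization and Person markup linked through stable `@id` values, so an Article can point at its author and publisher instead of repeating them. Every name, URL, and address here is a placeholder; only the schema.org types and properties are real.

```python
import json

SITE = "https://example.com"  # hypothetical site; every value below is a placeholder

organization = {
    "@type": "Organization",
    "@id": f"{SITE}/#organization",
    "name": "Example Co",
    "url": SITE,
    "logo": f"{SITE}/static/logo.png",
    "address": {"@type": "PostalAddress", "addressLocality": "Springfield", "addressCountry": "US"},
    "contactPoint": {"@type": "ContactPoint", "contactType": "customer support", "email": "support@example.com"},
}

author = {
    "@type": "Person",
    "@id": f"{SITE}/authors/jane-doe#person",
    "name": "Jane Doe",
    "jobTitle": "Head of Technical SEO",
    "url": f"{SITE}/authors/jane-doe",
    "worksFor": {"@id": organization["@id"]},  # reference, not a copy
    "knowsAbout": ["Technical SEO", "Structured data"],
}

article = {
    "@type": "Article",
    "headline": "How Answer Engines Choose Sources",
    "author": {"@id": author["@id"]},
    "publisher": {"@id": organization["@id"]},
}

jsonld = json.dumps(
    {"@context": "https://schema.org", "@graph": [organization, author, article]}, indent=2
)
print(jsonld)
```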

Breadcrumbs and content types

Implement BreadcrumbList and consistent category taxonomy.

Use precise types: Article, BlogPosting, HowTo, FAQPage.

Link related entities between hubs and spokes to boost graph connectivity.
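BreadcrumbList markup can be generated from the same taxonomy that drives your hub-and-spoke navigation. This helper is a hypothetical sketch with placeholder URLs; positions start at 1 for the root of the trail.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> dict:
    # trail runs from the site root to the current page as (name, url) pairs.
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": pos, "name": name, "item": url}
            for pos, (name, url) in enumerate(trail, start=1)
        ],
    }


trail = [
    ("Home", "https://example.com/"),
    ("Technical SEO", "https://example.com/technical-seo/"),
    ("Answer Engines", "https://example.com/technical-seo/answer-engines/"),
]
print(json.dumps(breadcrumb_jsonld(trail), indent=2))
```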

Consistent identifiers and SameAs

Assign stable @id URLs to key entities.

Use sameAs to connect to Wikidata and social profiles.

Keep names, logos, and descriptions consistent across platforms.
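Stable `@id` values only help if every reference resolves. This small checker (a hypothetical helper, assuming all references point to nodes in the same page's `@graph`) reports dangling `{"@id": ...}` references; the `sameAs` URLs in the sample are placeholders.

```python
def unresolved_refs(graph: dict) -> set[str]:
    # Collect every node's @id, then every reference-only object {"@id": ...},
    # and report references that point at no node in this graph.
    nodes = graph["@graph"]
    defined = {node["@id"] for node in nodes if "@id" in node}
    missing: set[str] = set()

    def walk(value: object) -> None:
        if isinstance(value, dict):
            if set(value) == {"@id"} and value["@id"] not in defined:
                missing.add(value["@id"])
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for child in value:
                walk(child)

    walk(nodes)
    return missing


graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": "https://example.com/#organization",
            "name": "Example Co",
            # sameAs holds plain URL strings (hypothetical profiles here), not
            # @id references, so this checker leaves them alone.
            "sameAs": ["https://www.linkedin.com/company/example-co", "https://x.com/exampleco"],
        },
        {
            "@type": "Person",
            "@id": "https://example.com/authors/jane-doe#person",
            "name": "Jane Doe",
            "worksFor": {"@id": "https://example.com/#org"},  # typo: wrong @id
        },
    ],
}
print(unresolved_refs(graph))
```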

AI-Driven Internal Linking and Clustering

AI methods can group keywords and automate contextual links to amplify topical hubs and passage discoverability.

NLP-driven keyword clustering

Use embeddings and cluster analysis to group queries by intent and semantics. This informs hub‑and‑spoke architecture and helps prevent keyword cannibalization. Learn more in Machine Learning for SEO: 7 Workflows.
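A minimal clustering sketch follows. It swaps in term-frequency vectors for real embeddings so it runs with the standard library alone, and uses single-pass leader clustering with an arbitrary 0.4 cosine threshold. In practice you would use an embedding model and a method such as agglomerative clustering or HDBSCAN, and tune the threshold on labeled queries.

```python
import math
import re
from collections import Counter


def vectorize(query: str) -> Counter:
    # Term-frequency vector as a stand-in for a real sentence embedding.
    return Counter(re.findall(r"[a-z0-9]+", query.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster_queries(queries: list[str], threshold: float = 0.4) -> list[list[str]]:
    # Single-pass "leader" clustering: join the most similar existing cluster
    # if it clears the threshold, otherwise start a new one. The summed vector
    # points the same way as the mean, so cosine against it equals cosine
    # against the centroid. Results depend on input order.
    clusters: list[dict] = []
    for q in queries:
        v = vectorize(q)
        best = max(clusters, key=lambda c: cosine(v, c["centroid"]), default=None)
        if best is not None and cosine(v, best["centroid"]) >= threshold:
            best["members"].append(q)
            best["centroid"].update(v)
        else:
            clusters.append({"centroid": Counter(v), "members": [q]})
    return [c["members"] for c in clusters]


queries = [
    "what is an answer engine",
    "answer engine definition",
    "core web vitals thresholds",
    "core web vitals inp",
]
print(cluster_queries(queries))
```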

Automating internal links responsibly

Set rules for anchor text, proximity, and link caps per section. Measure link velocity and ensure new pages get context links from high‑authority hubs. See our guide on Automated Internal Linking.
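Rules like these can be encoded in a small linker. This is a hypothetical sketch: it links only the first occurrence of each phrase, caps links per section, links each target once per page, and never nests a link inside an existing `<a>` element. Proximity rules and authority weighting are left out.

```python
import re


def link_first(text: str, phrase: str, url: str) -> tuple[str, bool]:
    # Match either an existing <a>...</a> element or the phrase; skip anchor
    # matches so we never nest a link inside another link.
    pattern = re.compile(
        rf"<a\b[^>]*>.*?</a>|\b{re.escape(phrase)}\b", re.IGNORECASE | re.DOTALL
    )
    for m in pattern.finditer(text):
        if m.group(0).lower().startswith("<a"):
            continue
        linked = f'<a href="{url}">{m.group(0)}</a>'
        return text[: m.start()] + linked + text[m.end():], True
    return text, False


def add_internal_links(sections: list[str], link_map: dict[str, str], max_per_section: int = 2) -> list[str]:
    # Illustrative rules: first occurrence only, a per-section cap, and each
    # target URL linked at most once per page.
    used_targets: set[str] = set()
    result = []
    for text in sections:
        added = 0
        for phrase, url in link_map.items():
            if added >= max_per_section or url in used_targets:
                continue
            text, linked = link_first(text, phrase, url)
            if linked:
                added += 1
                used_targets.add(url)
        result.append(text)
    return result


sections = [
    "Answer engines reward semantic SEO and clear entities.",
    "Read more about semantic SEO here.",
]
link_map = {"semantic SEO": "/semantic-seo", "entities": "/entities"}
print(add_internal_links(sections, link_map))
```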

Anchor patterns that help answer extraction

Use question anchors that mirror user phrasing.

Include entity anchors for brands, models, and standards.

Favor semantic anchors (“what is…”, “how to…”, “vs”).
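To audit existing anchors against these patterns, a rough classifier like the one below can flag generic anchors such as "click here" that give engines no context about the target. The word lists and bucket names are illustrative assumptions, not an established taxonomy.

```python
import re

GENERIC = {"click here", "here", "read more", "learn more", "this page"}
QUESTION_START = re.compile(r"^(what|how|why|when|which|who|can|does|is)\b", re.IGNORECASE)
SEMANTIC = re.compile(r"\b(what is|how to|vs|versus)\b", re.IGNORECASE)


def classify_anchor(text: str, entities: list[str]) -> str:
    # Rough buckets mirroring the patterns above; generic anchors are
    # checked first because they are the ones worth rewriting.
    t = text.strip()
    if t.lower() in GENERIC:
        return "generic"
    if QUESTION_START.match(t) or SEMANTIC.search(t):
        return "question/semantic"
    if any(e.lower() in t.lower() for e in entities):
        return "entity"
    return "descriptive"


entities = ["Core Web Vitals", "BM25"]
for anchor in ["click here", "How to add FAQPage schema", "BM25 vs dense retrieval", "Core Web Vitals guide"]:
    print(anchor, "->", classify_anchor(anchor, entities))
```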

Building an Answer-Engine-Ready Pipeline with SEOsolved

Problem: Teams struggle to identify high‑intent topics, plan entity coverage, and produce sourced, answer‑ready articles at scale.

Solution with SEOsolved: The platform analyzes competitors, discovers hundreds of keywords, builds a tailored content roadmap, and generates SEO‑optimized articles with credible sources—often in under 30 minutes.

Competitor analysis to find content gaps and opportunities.

Keyword discovery with clustering by intent and entity.

Roadmap generation that sequences hub‑and‑spoke publishing.

Article generation with citations and on‑page schema hints.

Micro‑example: A B2B SaaS team used SEOsolved to publish 12 answer‑first guides in four weeks; within 60 days, they earned citations in Perplexity for three target topics and saw a 28% lift in branded assistant mentions.

Prompting and Editorial Guardrails for AI Content

To minimize hallucinations and maximize citations, adopt source‑first prompts and human QA.

Source-first prompts that earn citations

Use a citation‑first template: “Cite 2–3 authoritative sources for each claim; prefer primary data; include source name + year.” Encourage neutral phrasing and explicit attributions early in the article. See guidance on how answer engines rely on structured data and authoritative sources (differences) (overview).
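A source-first template can be paired with an automated check on the draft it produces. This sketch builds a prompt from an approved source list and flags paragraphs lacking a "(Source, YYYY)" citation; the function names, the citation regex, and the sample draft are hypothetical.

```python
import re

# Matches in-text citations shaped like "(Source Name, 2024)".
CITATION = re.compile(r"\([A-Z][^()]*?,\s*(?:19|20)\d{2}\)")


def build_prompt(topic: str, sources: list[str]) -> str:
    listed = "\n".join(f"- {s}" for s in sources)
    return (
        f"Write an answer-first section about {topic}.\n"
        "Cite 2-3 authoritative sources for each claim; prefer primary data; "
        "include source name + year as (Source, YYYY).\n"
        "Use only these sources, and say so if they do not support a claim:\n"
        f"{listed}"
    )


def uncited_paragraphs(draft: str) -> list[str]:
    # Flag paragraphs with no citation so an editor can fix or cut them.
    paragraphs = [p.strip() for p in draft.split("\n\n") if p.strip()]
    return [p for p in paragraphs if not CITATION.search(p)]


draft = (
    "Zero-click searches keep rising (Example Research, 2024).\n\n"
    "Answer engines always prefer lists."
)
print(uncited_paragraphs(draft))
```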

Validation layers and human-in-the-loop

Automated checks: link status, schema validation, plagiarism scan.

Editorial QA: fact‑check claims, verify quotes, mitigate bias.

Safety review: remove PII, avoid unsafe recommendations.
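Part of the automated layer can run on rendered HTML. This sketch uses Python's standard-library `html.parser` to pull out JSON-LD blocks and flag syntax problems. It checks only JSON validity and the presence of `@context` and `@type`/`@graph`, so it complements rather than replaces a full schema validator; the helper names are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    # Collects the raw text of every <script type="application/ld+json"> block.
    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def jsonld_problems(html: str) -> list[str]:
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    problems = []
    for i, raw in enumerate(extractor.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            problems.append(f"block {i}: invalid JSON ({err.msg})")
            continue
        if not isinstance(data, dict) or "@context" not in data:
            problems.append(f"block {i}: missing @context")
        elif "@type" not in data and "@graph" not in data:
            problems.append(f"block {i}: missing @type or @graph")
    return problems


sample_html = (
    '<script type="application/ld+json">{"@context": "https://schema.org", "@type": "FAQPage"}</script>'
    '<script type="application/ld+json">{"@type": "Article",}</script>'
    '<script type="application/ld+json">{"@type": "Article"}</script>'
)
print(jsonld_problems(sample_html))
```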

Style, tone, and reading level

Use a consistent style guide with examples of answer‑first paragraphs.

Target an accessible reading level with short sentences and lists.

Standardize terminology for entities, models, and product names.
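Reading level can be tracked automatically with the Flesch Reading Ease formula: 206.835 - 1.015 × (words / sentences) - 84.6 × (syllables / words). The sketch below implements it with a crude vowel-group syllable counter, so treat scores as approximate and compare pages relative to each other rather than against a hard cutoff.

```python
import re


def count_syllables(word: str) -> int:
    # Heuristic: count vowel groups, drop one for a silent final "e"
    # (but not "-le"), and never return less than 1. Expect some errors.
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    # Higher is easier; scores around 60-70 are commonly read as plain English.
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / sentences) - 84.6 * (syllables / len(words))


simple = "Put the answer first. Keep it short."
dense = ("Comprehensive operationalization of heterogeneous organizational "
         "methodologies necessitates considerable deliberation.")
print(round(flesch_reading_ease(simple), 1), round(flesch_reading_ease(dense), 1))
```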

Measuring Performance Beyond Traditional SERPs

Track how often you appear in AI Overviews and assistants, which answers cite you, and whether those exposures drive conversions.

Track AI Overviews and generative appearances

Monitor impressions and placements in AI Overviews/SGE‑like modules.

Log which queries produce a generative card and whether you’re cited.

Use click‑through proxies (e.g., CTA CTR in the module context).

Assess share of answer across assistants

Sample target queries in ChatGPT, Bing Copilot, and Perplexity.

Measure brand inclusion rate, citation frequency, and position.

Track branded assistant mentions over time as share‑of‑voice.
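Once queries are sampled, these metrics reduce to simple ratios. A sketch, assuming you log one hypothetical `Observation` record per query per assistant, whether manually or with your own tooling; the sample rows are made up.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    # One manual or scripted check of a target query in one assistant.
    assistant: str
    query: str
    brand_mentioned: bool
    cited_position: Optional[int]  # 1-based rank among citations; None if not cited


def share_of_answer(observations: list[Observation]) -> dict[str, dict[str, Optional[float]]]:
    grouped: dict[str, list[Observation]] = defaultdict(list)
    for obs in observations:
        grouped[obs.assistant].append(obs)
    report = {}
    for assistant, rows in grouped.items():
        positions = [r.cited_position for r in rows if r.cited_position is not None]
        report[assistant] = {
            "inclusion_rate": sum(r.brand_mentioned for r in rows) / len(rows),
            "citation_rate": len(positions) / len(rows),
            "avg_citation_position": sum(positions) / len(positions) if positions else None,
        }
    return report


sample = [
    Observation("Perplexity", "what is aeo", True, 1),
    Observation("Perplexity", "aeo vs seo", False, None),
    Observation("Bing Copilot", "what is aeo", True, 3),
]
print(share_of_answer(sample))
```

Re-running the same query set on a fixed schedule turns these snapshots into the share-of-voice trend described above.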

Tie to business outcomes

Attribute assisted conversions to answer‑engine exposures.

Measure dwell time, CTA CTR, and conversion rate on cited pages.

Pair analytics with log analysis to connect crawl, render, and revenue.

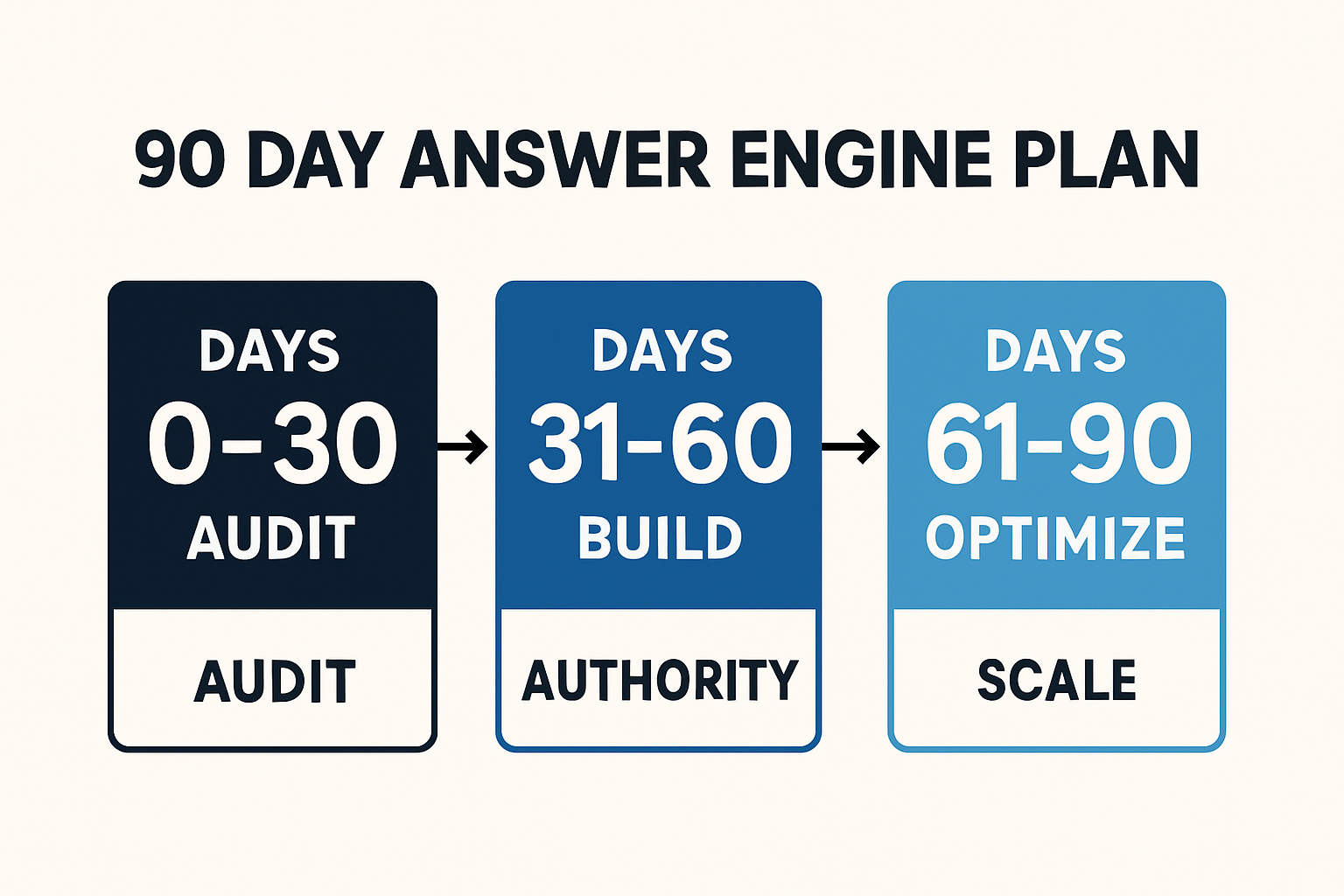

Blueprint: A 90-Day Answer Engine Action Plan

Follow this sequence to move from audits to answer‑ready scale. Use it as a sprint‑planning checklist.

Days 0–30: Audit and prototype

Run content and technical audits; map entities and gaps.

Define 3–5 clusters with answer‑first templates and schema.

Publish 5 pilot pages; validate retrieval with passage testing.

Baseline KPIs: AI Overview visibility and share of answer.

Days 31–60: Build topical authority

Expand spokes; interlink hubs and FAQs at scale.

Deploy Organization/Author, Article, FAQPage schema site‑wide.

Strengthen external citations and outbound link context.

Improve Core Web Vitals and fix render blockers.

Days 61–90: Optimize and scale

Refresh content with new data and examples; note change logs.

Iterate prompts, anchors, and headings based on answer tests.

Automate internal linking and cluster monitoring.

Scale production with SEOsolved’s roadmap and generation.

Risks, Compliance, and Technical Safeguards

Answer‑engine visibility is worthless if it creates legal, ethical, or privacy risk. Build safeguards into your pipeline.

Citations, licensing, and fair use

Prefer primary sources and licensed assets; quote minimally.

Attribute clearly with author, source, and date.

Retain links to originals to preserve context and provenance.

Bias, toxicity, and quality filters

Use bias‑mitigation checks and toxicity filters in editorial QA.

Avoid over‑generalizations; represent counter‑evidence when relevant.

Flag risky topics for additional human review.

Privacy and data minimization

Strip PII from prompts, drafts, and logs.

Limit retention windows; enforce role‑based access.

Document data flows for compliance and audits.
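A first-pass PII scrubber for prompts and logs can be a few regular expressions. This sketch is intentionally minimal: it will miss PII such as names and street addresses, and it over-matches (some dates and order numbers look like phone numbers), so a dedicated PII-detection tool is a better fit for production.

```python
import re

# Deliberately simple patterns; treat them as a first pass, not a guarantee.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    # Replace each match with a bracketed label so redactions stay visible.
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 (555) 010-0199 before launch."))
```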

Tooling and Stack Considerations

Connect research, production, and measurement so you can iterate quickly and safely.

CMS workflows for scalable publishing

Use a headless CMS with components for answer‑first snippets and schema injection.

Template FAQs, TL;DR, and citation blocks for consistency.

Version content and store change notes for freshness signals.

Analytics and log-based insights

Analyze logs for crawl stats, render parity, and error patterns.

Track engagement metrics and event funnels tied to CTAs.

Correlate assistant mentions with on‑site conversions.

Lightweight RAG prototypes for content testing

Create a small vector index of your content to simulate retrieval and re‑ranking. Compare which passages win and rewrite weak sections. For broader strategy across AI surfaces, see Generative Engine Optimization (GEO).
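A sketch of such a prototype, using a small TF-IDF index built with the standard library as a stand-in for a vector database. The passage IDs, the `ours#` prefix convention, and the sample queries are hypothetical; the report shows which passage wins each query and which of your passages never win.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class PassageIndex:
    # Tiny in-memory index over TF-IDF vectors, standing in for a real
    # embedding index; good enough to compare passages against each other.
    def __init__(self, passages: dict[str, str]) -> None:
        self.ids = list(passages)
        docs = [Counter(tokenize(passages[pid])) for pid in self.ids]
        df = Counter(t for doc in docs for t in doc)  # iterating a Counter yields unique terms
        n = len(docs)
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
        self.vectors = [self._weigh(doc) for doc in docs]

    def _weigh(self, tf: Counter) -> dict[str, float]:
        # Terms never seen in the index get weight 0.
        return {t: c * self.idf.get(t, 0.0) for t, c in tf.items()}

    @staticmethod
    def _cosine(a: dict[str, float], b: dict[str, float]) -> float:
        dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
        norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
        return dot / norm if norm else 0.0

    def search(self, query: str, k: int = 3) -> list[str]:
        q = self._weigh(Counter(tokenize(query)))
        scored = sorted(zip(self.ids, self.vectors), key=lambda iv: self._cosine(q, iv[1]), reverse=True)
        return [pid for pid, _ in scored[:k]]


def win_report(index: PassageIndex, queries: list[str], own_prefix: str = "ours#") -> dict:
    # Which passage wins each query, and which of our passages never win
    # (candidates for an answer-first rewrite).
    winners = {q: index.search(q, k=1)[0] for q in queries}
    never_win = [pid for pid in index.ids if pid.startswith(own_prefix) and pid not in winners.values()]
    return {"winners": winners, "never_win": never_win}


passages = {
    "ours#definition": "An answer engine generates direct answers with citations.",
    "ours#history": "Search engines began with ten blue links.",
    "competitor#definition": "Answer engines are chatbots.",
}
queries = ["what is an answer engine", "direct answers with citations"]
print(win_report(PassageIndex(passages), queries))
```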

Action Checklist and Next Steps

10 high-impact technical and content actions

Add an answer‑first paragraph and TL;DR to top pages.

Implement Organization, Person, Article, and FAQPage schema.

Group FAQs by intent; add follow‑up prompts.

Cite two authoritative sources per key claim.

Reduce CLS/INP and ensure SSR/SSG HTML parity.

Standardize entity names and sameAs identifiers.

Automate internal links to hubs with contextual anchors.

Track AI Overview visibility and assistant citations.

Refresh high‑value pages quarterly with new data.

Use SEOsolved for roadmap + rapid sourced article generation.

Accelerate with SEOsolved

Stop guessing what to publish and start shipping answer‑ready content. SEOsolved unifies competitor analysis, keyword discovery, entity‑mapped roadmaps, and fast article generation with credible sources—so you can rank on Google, ChatGPT, and beyond.

FAQ

What is an answer engine?

An AI system that generates direct answers—often with citations—by retrieving and synthesizing content from multiple sources.

How is AEO different from SEO?

SEO ranks links in SERPs; AEO earns inclusion and citations inside AI answers and summaries.

What signals matter most for answer inclusion?

Entity clarity, E‑E‑A‑T, structured data, clean technical SEO, authoritative citations, and freshness.

How do I measure success in AI Overviews?

Track generative appearances, citation frequency, share of answer, CTA CTR, and assisted conversions.

Can I scale answer‑ready content safely?

Yes—use source‑first prompts, human QA, schema, and a platform like SEOsolved to publish quickly with citations.